When working with SQL Server, you are going to be using TempDB; that is a certainty.

Using temporary tables, however, isn’t a certainty.

I’m taking it for granted that at one time or another you have used them but it’s not something I can say for certain.

…if you aren’t using temporary tables AT ALL, please let me know what you’re using SQL Server for. I’ll either be impressed or shocked!…more than likely shocked…

However, one of the features that I find with temporary tables is that they inherit the identity from whatever table they were created from, and more often than not, this is not the identity that you want them to have.

…I mean if we wanted them to have that identity, we would have just used the base table, right?…

Now I have ways around this that I’ll blog about later on .

However, while working with these temporary tables and their identites, I found something that I just can’t explain yet and thought I would blog about it so when I do figure it out I can look back at this and call myself all kinds of names for being so stupid.

So first of all, let’s set up our base table:

USE tempdb; GO -- Create our base table CREATE TABLE dbo.A (A_ID INT IDENTITY(1, 1), x1 INT, noise1 int DEFAULT 1, noise2 char(1) DEFAULT 'S', noise3 date DEFAULT GETUTCDATE(), noise4 bit DEFAULT 0); -- Create random data between the range of [0-3] INSERT INTO dbo.A(x1) SELECT s1000.n FROM ( SELECT TOP (10) n = 1 FROM sys.columns) AS s10 -- 10 CROSS JOIN ( SELECT TOP (10) n = 1 FROM sys.columns) AS s100 -- 10 * 10 CROSS JOIN ( SELECT TOP (10) n = ABS(CHECKSUM(NEWID())) % 4 FROM sys.columns) AS s1000; -- 100 * 10 SELECT * FROM dbo.A;

Random-ish results but should be something like this:

Now, SQL Server has a useful little Database Engine Stored Procedure called sp_help that, along with a plethora of other useful information, can return a result set on identity.

If we query our table, we would get the following results:

EXECUTE sp_help 'dbo.A';

What this is telling us is that:

- Our Identity column is [A_ID] (identity),

- Our Identity value starts at 1 (seed), and

- It goes up by 1 (increment) each time.

…little tip: check out Tools -> Options -> Keyboard -> Query Shortcuts… you can just highlight a table and hit “Alt + F1” and you have the same results as running the above query…Efficiency for the win!…

which is great if we wanted to know what we started off with, but what about looking into the future? What about knowing what’s going to get inserted next?

Well for the identity column, we can!

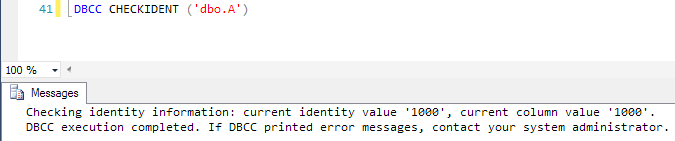

DBCC CHECKIDENT(), passing in your table name.

NOTE: I’ve just given you a potentially dangerous command as it has some optimal parameters that can break stuff. Do me a favour and make sure you’re not on Production.

…see the above? How it’s in bold? Do me a favour and double check you’re not on Production? Cheers!…

so we’ve looked into the future and we can tell that with sp_help and DBCC CHECKIDENT, our future identity will be:

DBCC CHECKIDENT().current identity value + sp_help.increment

Now say that part of our query is only interested in rows where x1 = 1.

The most basic way to create our temporary table with this information is probably the one that the majority would use:

SELECT * INTO #temp FROM dbo.A WHERE x1 = 1; SELECT * FROM #temp;

…Nice, simple, easy. 3 things that we want….

And if we were to ALT+ F1 #temp we would get the following:

…Same identity!…

So with the same identity, we have the same current identity value, right?

DBCC CHECKIDENT('#temp')

…WRONG!…

Now I like this.

I appreciate this.

I’m thankful for this.

But I’m not sure why exactly this happens, apart from SQL Server is smart enough to know that it’s not going to need the exact same current identity value for this new table.

Using a (slightly depreciated) command

SET STATISTICS PROFILE ON; SELECT * INTO #temp FROM dbo.A WHERE x1 = 1;

..it seems like SQL Server is changing the identity for you…

Now just to make this even more confusing, we run the following which for me shows that the last x1 value is 2.

SELECT * FROM dbo.A ORDER BY A_ID DESC;

So what I’m expecting is that if we were to input this into a temporary table, we wouldn’t expect to see that setidentity…-7.

SET STATISTICS PROFILE ON; SELECT * INTO #temp2 FROM dbo.A WHERE x1 = 2;

…But it’s there!…

Fine, okay so, just means that our current identity value has been changed!

So we run our DBCC CHECKIDENT and…

DBCC CHECKIDENT ('#temp2')

No change.

And that’s my problem!

I don’t have an answer for this at the moment but when I figure this out, I’ll let you know, and if anyone has the answer, feel free to share 🙂

But at least I have a partial answer:

Question: When subtracting 7 from a value equal no change?

Answer: When SQL Server is involved.